Recently, I gave a talk at the 2011 Open Hardware Summit. The program committee had requested that I prepare a “vision” talk, something that addresses open hardware issues 20-30 years out. These kinds of talks are notoriously difficult to get right, and I don’t really consider myself a vision guy, but I gave it my best shot. Fortunately, the talk was well-received, so I’m sharing the ideas here on my blog.

Currently, open hardware is a niche industry. In this post, I highlight the trends that have caused the hardware industry to favor large, closed businesses at the expense of small or individual innovators. However, looking 20-30 years into the future, I see a fundamental shift in trends that can tilt the balance of power to favor innovation over scale.

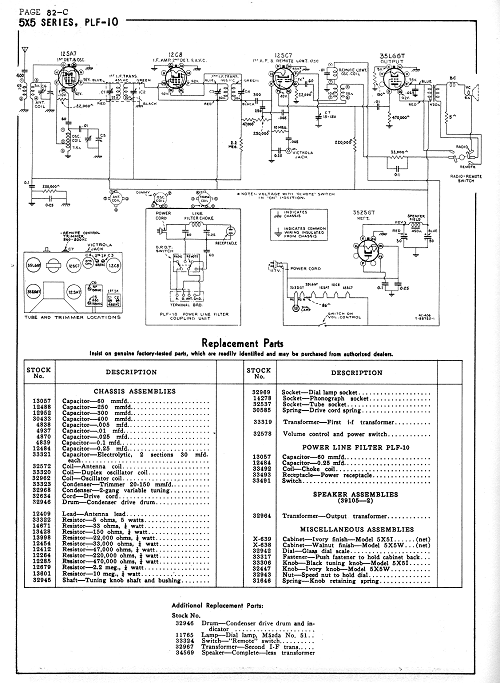

In the beginning, hardware was open. Early consumer electronic products, such as vacuum tube radios, often shipped with user manuals that contained full schematics, a list of replacement parts, and instructions for service. In the 1980s, computers often shipped with schematics. For example, the Apple II shipped with a reference manual that included a full schematic of the mainboard, an artifact that I credit as strongly influencing me to get into hardware. However, contemporary user manuals lack such depth of information; the most complex diagram in a recent Mac Pro user manual shows how to sit at the computer: “thighs slightly lifted”, “shoulders relaxed”, etc.

What happened? Did electronics just get too hard and complex? On the contrary, improving electronics got too easy: the pace of Moore’s Law has been too much for small-scale innovators to keep up with.

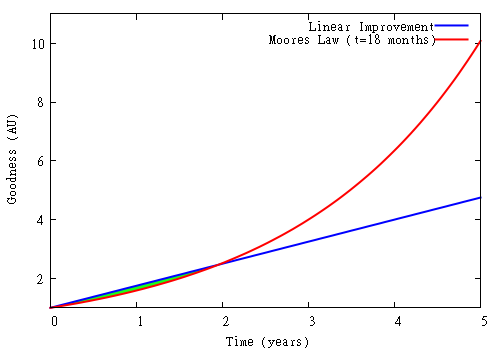

Consider this snapshot of Moore’s law, illustrating “goodness” (pick virtually any metric: performance, density, price-per-quanta) doubling every 18 months. This chart is unusual in that the vertical axis is linear. Most charts depicting Moore’s law use a logarithmic vertical scale, which flattens the curve’s sharp upward trend into a much more innocuous-looking straight line.

Above: Moore’s Law, doubling once every 18 months (red) versus linear improvement of 75% per year (blue). The small green sliver between the red and blue lines (found at t < 2 years) represents the window of opportunity where linear improvement exceeds Moore’s law. Note that the vertical axis on this graph is linear scale.

Plotted in blue is a line that represents a linear improvement over time. This might be representative of a small innovator working at a constant but respectable rate of 75% per year, non-compounding, to add or improve features on a given platform. The tiny (almost invisible) green sliver between the curves represents the market opportunity of the small innovator versus Moore’s law.

The juxtaposition of these two curves highlights the central challenge facing small innovators over the past three decades. It has been more rewarding to “sit and wait” than to innovate: if it takes two years to implement an innovation that doubles the performance of a system, one is better off not trying at all and simply upgrading to the latest hardware two years later. It is a Sisyphean exercise to race against Moore’s Law.
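To make the “sit and wait” arithmetic concrete, here is a minimal Python sketch of the idealized model in the caption. It assumes “goodness” is normalized to 1.0 at t = 0, grows as 2^(t/1.5) under an 18-month doubling period, and grows linearly by 75% of the baseline per year. The function names (`moore`, `linear`, `window_end`) are illustrative, not from the talk.

```python
def moore(t_years, doubling_years=1.5):
    """'Goodness' under exponential doubling, normalized to 1.0 at t = 0."""
    return 2 ** (t_years / doubling_years)


def linear(t_years, rate=0.75):
    """Non-compounding gain: each year adds 75% of the original baseline."""
    return 1 + rate * t_years


def window_end(doubling_years=1.5, rate=0.75, step=0.001):
    """Scan forward in small steps until the exponential curve catches the
    linear one; this is the right-hand edge of the green sliver."""
    t = step
    while moore(t, doubling_years) < linear(t, rate):
        t += step
    return t


print(f"window closes at ~{window_end():.2f} years")

# An innovation that takes 2 years to double performance (2.00x) versus
# simply buying whatever hardware exists 2 years later.
print(f"innovate: 2.00x, wait: {moore(2.0):.2f}x")
```

Under these assumptions the window closes just short of two years, and waiting two years yields roughly 2.5x, which beats the 2x innovation.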

This exponential growth mechanic favors large businesses with the resources to achieve huge scale. Instead of developing one product at a time, a competitive business must have the resources and hopefully the vision to develop 3 or 4 generations of products simultaneously. Furthermore, reaching the global market within the timespan of a single technology generation requires a supply chain and distribution channel that can handle millions of units a month: consider that selling at a rate of 10,000 units per month would take over 8 years to reach “only” a million users, or about 1% of the households in the US alone. And, significantly, the small barrier (a few months’ time) created by closing a design and forcing the competition to reverse engineer products can be an advantage, especially when contrasted to the pace of Moore’s law. Thus, technology markets have become progressively inaccessible to small innovators over the past three decades as individuals struggle to keep up with the technology treadmill, and big companies continue to close their designs to gain a thin edge on their competition.
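The unit arithmetic in this paragraph can be checked in a few lines of Python. As an added illustration (not from the post), the sketch also expresses the time to a million users in 18-month technology generations, using the doubling period from the first chart; `months_to_reach` is a hypothetical helper name.

```python
def months_to_reach(users, units_per_month):
    """Months of sales needed to ship `users` units at a constant monthly rate."""
    return users / units_per_month


months = months_to_reach(1_000_000, 10_000)
years = months / 12
# Number of 18-month technology generations that elapse in the meantime.
generations = months / 18
print(f"{months:.0f} months = {years:.1f} years = {generations:.1f} generations")
```

At that rate, a product would live through more than five technology generations before reaching its millionth customer, which is why scale matters so much under an 18-month cadence.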

However, this trend is changing.

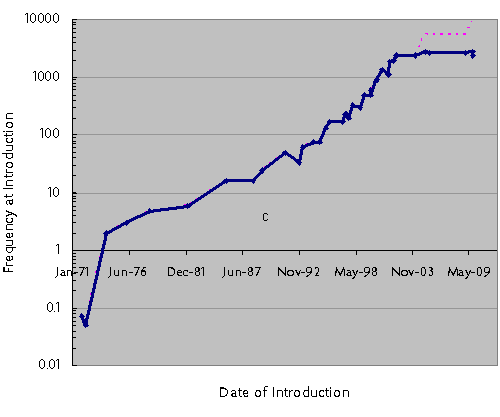

The plot above shows Intel CPU clock speed at introduction versus time. There is an abrupt plateau in 2003 where clock speed stopped increasing. Since then, CPU makers have been using multi-core technology to drive performance (effective performance extrapolated as the pink dashed line), but this wasn’t by choice: certain physical limits were reached that prevented practical clock scaling (primarily related to power and wire delay scaling). Transistor density (and hence core count) continues to scale, but the pace is decelerating. In the ’90s, transistor count was doubling once every 18 months; today, it is probably slower than once every 24 months. Soon, transistor density scaling will slow to a pace of 36 months per generation, and eventually it will come to an effective standstill. The absolute endpoint for transistor scaling is a topic of debate, but one study indicates that scaling may stop at around 5 nm effective gate length sometime between 2020 and 2030 (H. Iwai, Microelectronic Engineering (2009), doi:10.1016/j.mee.2009.03.129). 5 nm is about the space between 10 silicon atoms, so even if this guess is wrong, it can’t be wrong by much.

The implications are profound. Someday, you won’t be able to rely on buying a faster computer next year. Your phone won’t get any smaller or more powerful. And the flash drive you buy next year will cost the same and store the same number of bits as the one you buy today. The idea of an “heirloom laptop” may sound preposterous today, but someday we may perceive our computers as cherished and useful heirlooms to hand down to our children as part of our legacy.

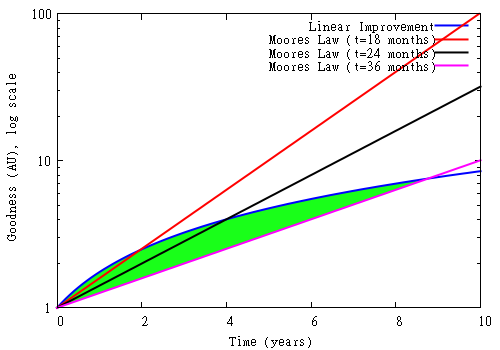

This slowing trend is good for small businesses, and likewise for open hardware practices. To see why this is the case, let’s revisit the plot of Moore’s Law versus linear improvement, but this time overlay two new scenarios: technology doubling once every 24 and 36 months.

Above: Moore’s Law versus linear improvement (blue), plotted against the scenarios of doubling once every 18 months (red), every 24 months (black), and every 36 months (pink). The green area between the pink and blue lines represents the window of opportunity where linear improvement exceeds Moore’s law when the doubling interval is set to 36 months. Note that the vertical axis on this graph is log scale.

Again, the green area represents the market opportunity for linear improvement vs. Moore’s Law. In the 36-month scenario, not only does linear improvement have over 8 years to go before it is lapped by Moore’s Law, but there is also a point at around year 2 or 3 where the optimized solution is clearly superior to Moore’s Law. In other words, there is a genuine market window for monetizing innovative solutions at a pace that small businesses can handle.
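The three scenarios can be compared numerically with a short Python sketch. It assumes the same idealized model as the charts: exponential growth 2^(t/T) for a doubling period of T years, against non-compounding growth 1 + 0.75t. The crossover point is the same whichever vertical scale the plot uses. The helper names are illustrative, and the “peak advantage” (the year where the linear curve is furthest ahead in ratio terms) is an added measure, not one from the talk.

```python
import math

RATE = 0.75  # non-compounding improvement per year, as in the post's blue line


def crossover_year(doubling_years, rate=RATE):
    """Year t > 0 at which 2**(t/T) catches up with 1 + rate*t (bisection).

    Assumes ln(2)/T < rate, i.e. the linear curve starts out ahead.
    """
    def gap(t):
        return (1 + rate * t) - 2 ** (t / doubling_years)

    lo, hi = 1e-6, 1.0
    while gap(hi) > 0:  # widen the bracket until the exponential is ahead
        hi *= 2
    for _ in range(100):
        mid = (lo + hi) / 2
        if gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def peak_advantage(doubling_years, rate=RATE):
    """Year and size of the largest ratio linear / exponential.

    Setting d/dt ln[(1 + r t) / 2**(t/T)] = r / (1 + r t) - ln(2)/T = 0
    gives 1 + r t = r T / ln(2).
    """
    t = (rate * doubling_years / math.log(2) - 1) / rate
    ratio = (1 + rate * t) / 2 ** (t / doubling_years)
    return t, ratio


for months in (18, 24, 36):
    T = months / 12
    t_peak, ratio = peak_advantage(T)
    print(f"{months}-month doubling: lapped after {crossover_year(T):.2f} yr; "
          f"peak advantage {ratio:.2f}x at {t_peak:.2f} yr")
```

With these assumptions, the 24-month case crosses at exactly year 4 (2^2 = 1 + 0.75·4). The 36-month case is lapped only after roughly 8.8 years, and the linear curve’s advantage peaks at about 1.6x near year 3, consistent with the “year 2 or 3” window described above. For 18 months, the peak is only about 1.1x.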

Also, as Moore’s law decelerates, there is a potential for greater standardization of platforms. While today it seems ridiculous to create a standard tablet or mobile phone chassis with interchangeable components, this becomes a reasonable proposition when components stop shrinking and changing so much. As technology decelerates, there will be a convergence between that which is found in mobile phones, and that which is found in embedded CPU modules (such as the Arduino). The creation of stable, performance-competitive open platforms will be enabling for small businesses. Of course, a small business can still choose to be closed, but by doing so it must create a vertical set of proprietary infrastructure, and the dilution of focus to implement such a stack could be disadvantageous.

In the post-Moore’s law future, FPGAs may find themselves performing respectably relative to their hard-wired CPU kin, for at least two reasons. First, the flexible yet regular structure of an FPGA may lend it a longer scaling curve, in part due to the FPGA’s ability to reconfigure circuits around small-scale fluctuations in fabrication tolerances. Second, the extra effort to optimize code for hardware acceleration will amortize more favorably as CPU performance scaling increasingly relies upon difficult techniques such as massive parallelism. After all, today’s massively multicore CPU architectures are starting to look a lot like the coarse-grain FPGA architectures proposed in academic circles in the mid to late ’90s. An equalization of FPGA and CPU performance should greatly facilitate the penetration of open hardware at a very deep level.

There will be a rise in repair culture as technology becomes less disposable and more permanent. Replacing worn out computer parts five years from their purchase date won’t seem so silly when the replacement part has virtually the same specifications and price as the old part. This rise in repair culture will create a demand for schematics and spare parts that in turn facilitates the growth of open ecosystems and small businesses.

Personally, I’m looking forward to the return of artisan engineering, where elegance, optimization and balance are valued over feature creep, and where I can use the same tool for a decade and not be viewed as an anachronism (most people laugh when they hear my email client is still Eudora 7).

The deceleration of Moore’s Law is already showing its impact on markets that are not as sensitive to performance. Consider the rise of the Arduino platform. The Arduino took several years to gain popularity, with virtually the same hardware at its core since 2005. Fortunately, the demands of Arduino’s primary market (physical computing, education, and embedded control applications) have not grown, and thus the platform can be very stable. This stability in turn has enabled Arduino to grow deep roots in a thriving user community with open and interoperable standards.

Another example is the Shanzhai phenomenon in China; in a nutshell, the Shanzhai are typically small businesses, and they rely upon an ecosystem of “shared” blueprints. The Shanzhai are masters at building low-end “feature phones”. The market for feature phones is largely insensitive to improvements in CPU technology; you don’t need a GHz-class CPU to drive the simple UIs found on feature phones. Thus, the same core chipset can be re-used for years with little adverse impact on demand or competitiveness of the final product. This platform stability has afforded these small, agile innovators the time to learn the platform thoroughly, and to recover this investment by creating riff after riff on the same theme. Often, the results are astonishingly innovative, yet accomplished on a shoestring budget. Initially, the Shanzhai were viewed simply as copycats; but thanks to the relative stability of the feature phone platform, they have learned their tools well and are now pumping out novel and creative works.

The scene is set for the open hardware ecosystem to blossom over the next couple of decades, with some hard work and a bit of luck. The inevitable slowdown of Moore’s Law may spell trouble for today’s technology giants, but it also creates an opportunity for the fledgling open hardware movement to grow roots and be the start of something potentially very big. In order to seize this opportunity, today’s open hardware pioneers will need to set the stage by creating a culture of permissive standards and customs that can scale into the future.

I look forward to being a part of open hardware’s bright future.

Great post! One quibble though… You say, “Replacing worn out computer parts five years from their purchase date won’t seem so silly when the replacement part has virtually the same specifications and price as the old part.”

I’m not sure I buy that. Microwaves plateaued at least a decade ago. The one I buy today has the same interface and heats water just as fast as the one I bought ten years ago, and is not far off in price. Alas, the rise in repair culture has not materialized.

My guess is that they’re just too cheap to make. If it takes 1/2 hour to diagnose and repair a microwave, you’re probably better off just buying a new one.

I’m afraid it will be similar with the computers of the future. Like today’s cell phones, everything will be soldered down, highly integrated, and seriously unfriendly to repair. But they’ll be so cheap that nobody will care.

That said, I hope I’m wrong and you’re right. :)

It’s true that you could own a microwave that isn’t worth fixing, or you could be in my situation and own one that you would seriously consider fixing because of how great a design it is. I have a Whirlpool MT244 Talent microwave and I would love to know more about the team who designed and built it.

Of every machine and device I have ever owned, my microwave has exceeded my expectations in usability and utility. Jog dial controls, large physical smack’em buttons, LED display and an incredibly well thought out state machine model for the UI. When I use the office microwave with its tiny membrane buttons, washed-out LCD display and bare-bones firmware that won’t let me extend the timer on the fly or adjust power levels mid-cook, it drives home the point: engineering is all it takes to make the difference between something treasured and a throwaway item.

So my microwave says Made in Sweden but I really would like to know a lot more about it. I think it would be great if devices came with a list of credits and there was an IMDB type site to track that kind of thing. What’s exciting about the open hardware ecosystem that Bunnie mentions here is that open hardware might lead us to a future where the best engineered products become ubiquitous. That would be a great outcome.

something similar to http://mbandf.com

The original owner of my first home was a tugboat captain, and he had an honest-to-goodness ship’s galley designer build him an unbelievably useable yet remarkably tiny kitchen.

The microwave was built right into the custom, round-cornered cherry cabinetry. No way in hell was I going to replace it when it broke – it needed fixing!

After disassembly I found complete schematics and parts ordering information tucked inside the device. I debugged it in an hour with a cheap voltmeter, ordered a replacement component, and it’s working today – ten years later.

Artisan microwave? I dunno. Unlike computers, most appliances are built to be fixed by people who haven’t attended any specialized training courses.

Appliances (like the microwave you describe and the $30 one you can get at walm….) are required to have schematics supplied with them…as are dishwashers, clothes washers, dryers, etc….

Computers probably don’t because the designs change so often… however, if you know your chipset/etc… you can usually dig up the data-sheets and get a reference design… (some are proprietary though and unavailable to general public)… as designs and methodologies stabilize though… we should start seeing them included, or more likely… available freely on the internet… now.. finding a motherboard that is designed to last a long time… that is more difficult… especially with the lead-free solder they make us use now!



Open Hardware. Well, let me tell you this. Recently we were purchasing around 25 machines and 2 servers for the company. The total cost was around $18000. We decided to go for open hardware where we customised and built everything ourselves and the cost was around $15000. We saved $3000 in the process and it was a learning experience. Sooner or later, hardware companies will have to offer more bang for their buck, or it’s gonna be open hardware everywhere.

The reason most people go for branded machines is the well-tested, kinks-ironed-out nature of their designs. The extra stability this brings to a business-critical environment is enormous.

But given the way they skimp on testing and cut corners in fixing issues these days, it won’t be long before self-assembled machines are seen as equally stable. Suddenly the brands will find themselves looking for reasons to justify their higher prices. The reputation built up over many years will have slipped away, and they won’t be able to get it back overnight.

Perhaps it isn’t quite the same, but all the computers I have built have had far fewer problems, and those problems easier to diagnose (because I built it, I knew where the potential glitches were) than those pre-built systems I am asked to look after. Give me the “artisan” route any time* – no Apples for me.

*OK, my laptop is pre-built like all the others, but I use an eight-year-old ThinkPad X40, because it is fixable (and yes, I do know about the crappy HDD – I’m ready to put a SSD in when it quits!)

It’s great to see this level of forethought going into opportunities for open hardware, and your enthusiasm is encouraging and exciting!

There is another area that must keep pace, however, if smaller companies and individual innovators are going to be able to play in the hardware space. The regulatory burden on electronics – especially wireless electronics – is currently extremely onerous. It costs thousands of dollars to attain something as simple as FCC Part 15 certification, accounting for the cost of NRTL testing, compliance reports and FCC application fees. This gets much worse when a product integrates WiFi or a mobile data network radio, as the rigor of testing increases dramatically and additional regulatory bodies must be appeased. Even using a pre-certified radio module, the cost to achieve device acceptance and endorsement for use on 3G mobile networks is over US$40K. And all of this is assuming certification is achieved on the first pass!

At least two things need to happen in the regulatory space to open up the opportunities you discuss here. First, the standards bodies need to dramatically simplify regulations, even if only for a subset of less-complex devices. Newly-competing innovators who want to stay on the right side of the law need to be able to fully understand the requirements for their devices and concretely estimate the effort and cost of compliance – without poring over the text of endless laws or buying expensive standards. Second, the cost of compliance needs to be abated. This, on the other hand, is something the community can pull together to achieve. Small-business and individual-innovator-friendly commercial laboratories could offer pro-bono chamber time to companies and individuals who open-source their hardware. Hacker spaces could assemble pre-compliance testing rigs (even if they’re just screen rooms with repurposed radio gear) for use by their members. As the movement grows, product development cooperatives could pool resources to assemble compliance-grade testing facilities, using revenue from non-member testing fees to offset maintenance, overhead and ongoing calibration costs.

There are some significant regulatory and cost barriers to commercial electronics innovation outside the walls of big companies, but as the open hardware movement grows and success is defined more by offering compelling products and less by relying on market exclusion and stables of patents, innovators will find ways to reduce these burdens…

I agree with Dave here.

But I would add a few more details you shouldn’t leave out of the picture when thinking about the future of open hardware:

* Most people don’t care if your design is open or not. They just go with what friends, the media, or both consider cool and tested. I am not including hackers here; they are a marginal market. A profitable large-scale business employing thousands of engineers worldwide will be influenced very little by OSH in consumer electronics. A financial crisis that forces people to keep the same TV for more than 5 years may have a far larger influence on small businesses (e.g. repair, recycling). Think of the ’60s: the TV didn’t stay in the living room for 15-20 years because it was open; it stayed because it was a relatively expensive item, and repairing it was a reasonable investment when needed. That is not true anymore.

* Moore’s law has little or nothing to do with offering open circuit schematics. The Apple II included schematics because Apple started out as garage hackers targeting that audience, mainly because of Wozniak. Remember, the Apple I was a DIY kit. They had to compete with monsters like IBM and this was a good way to do it, but once they had a reasonable client base they moved away from the garage-hacker model forever. Apple is today the most closed consumer electronics tech company in the world, and will be for a while. Still, you will have a hard time convincing people that an iPhone is not the best phone out there to own.

* Even Arduino follows that trend. They made it OSH because they were basically going to lose everything, as the school they were working at was going to close. So with little investment they just opened up what they already used for educational purposes. That carries zero risk. Now that they have a good client base and a well-known brand, they are producing faster boards themselves, as well as shields that compete directly with others who have been investing over the last 2-3 years to produce the very same thing.

* The dream of many “entrepreneurs” is not necessarily to succeed with their product but to get the attention of a big player who acquires it so they get rich. This is not going to change anytime soon. Virtually any product that is a business success will end up as part of a larger business, acquired or expanded, repeating Apple’s history.

* Many components are restricted in distribution by regulations and cannot be used or exported as open HW. That is not going to change for whatever US law considers dangerous, and that can be as simple as an oscillator beyond 3GHz. Furthermore, some companies require you to sign an NDA to access data due to regulations. That is not going to change either.

And last but not least, don’t forget that the big companies already have many engineers. They are not stupid people. They have the experience and the tools to do things that a small business couldn’t do.

While you concentrated on Moore’s Law to show the mechanical side, you’ve neglected the more influential side which is corporatism and IP Law. Open systems may have a standardized platform but you know that any company like Apple or IBM or Microsoft will not allow for a standard of software or usability exist without their consent. Even if we ever do reach a ceiling for hardware, heuristics will be an entrenched sector of business profitability. Just ask those companies that tried to make knock-off Apples.

The only country right now that can thumb its nose at these companies is China. They have or can acquire the technologies and foundries to make knock-offs. Without constant worry about lawsuits or forced closures, these foundries, as you say, “riff” on the platform until it becomes specialized or esoteric. Essentially, the rest of the world will be hidebound by a lack of innovation because of strangulating IP law.

As always, great informative post, thanks!

By the way, did you have any talks about Marcin Jakubowski’s Global Village Construction Set? There was a talk at the summit about their steam engine project (by Mark Norton)?

Eh, a crazy idea: can _you_ seed an OSE charter in china? Maybe Seedstudio can spin off an Open Source Ecology group there…

In closing: bravo on your NeTV and your cryptographic tricks… brilliant!

It’s an interesting look, but I’m not buying it for two reasons:

1. You’re not taking into account the human need for new and different. The reason for increasingly rapid upgrade cycles isn’t that manufacturers keep making technological advancements and then pushing it onto unsuspecting consumers, it’s that people want new stuff, better experiences, trendier designs. Just look at the fashion world. Even if CPUs and memory aren’t advancing by leaps and bounds in performance, manufacturers will still be making constant design, usability, and feature improvements to fulfill demand (and keep themselves in business).

2. You’re assuming that things just stay on the current silicon chip process that’s been rapidly improved on for decades. This ignores the fact that there are other ways to make integrated circuits, memory, etc. There may be a lull where Moore’s law slows down temporarily, but something like organic memory, quantum computing, etc. will eventually kick things back up again.

Bad analogy. Less than five percent of the world’s humans care about the fashion world. Look around you – how many people on the street are wearing blue jeans? It’s a hundred year old design, at least.

People don’t really want fancy new stuff as much as they want reliability. It’s just that billions of dollars are being spent to convince you otherwise; “fashionable” items are for those who are functionally programmed by advertising. In the absence of such programming people trend towards function, availability and durability, not “new and different”.

When was the last time you modified a garment? How many of you have darned a sock or mended a pair of jeans lately?

I spoke with a colleague about exactly what is discussed in this article a few weeks ago (thanks for the debate, Chris Jackson) and the thing that struck me most is the impact that cheaper/standardised components will have on the turnover of products. Clothing has never been cheaper than it is today. Technology (and labour abuses) has enabled it to be produced faster and for less. We don’t NEED more clothes but that doesn’t seem to matter; everyone still buys more of them, more often and for less. Clothing is modified by some, made from scratch by some, but the vast majority of people just buy clothes right off the rack and discard them when they get bored or when the clothes quickly wear out due to being made so badly. The example of the jeans would work, but only if we were still wearing the exact same pair of jeans (or at least ones made partly of the exact same material) as those made 100 years ago, and we all know that we aren’t. Just because the basic design idea of jeans hasn’t changed in 100 years does not mean that the jeans you see worn today are the same as the originals. Small, incremental changes over time to the design details of jeans mean we have had “Mom Jeans”, James Dean Jeans, Hippy jeans, Hipster jeans etc. There are many different versions of jeans and they all identify us as a “kind” of person; they are a very effective signifier. The result has been huge amounts of individuality and very effective communication, but with waste, massive labour abuses and environmental destruction.

The funny thing about fashion is that it is often held up as an example to other industries of how an open industry can be successful. It is almost impossible to protect a fashion design, and things change so fast it’s not worth the effort anyway, so fashion could be seen as the ultimate Creative Commons. The skills, techniques and equipment needed for consumers to make/modify clothing are readily available and cheap. But almost no-one does it themselves anymore (a stark change from 40/50 years ago) and so the ready-to-wear fashion/clothing industry is extremely profitable. How? By selling lots of stuff really cheaply to many many people, and convincing them that they NEED that new thing, even if they really don’t.

I fear for what it might mean if other industries operate at the speeds that fashion does, because even though the option to alter clothing has always been available, clothing has now become so cheap to buy there is little point. A small minority of people like yourselves might use cheaper standardized components as an opportunity to modify and create, but the vast majority of the industry and consumers probably will just buy it “off the rack” and discard it when bored or feel like they need a purple geometric themed phone instead of a grey is the new black one.

Quite the opposite. Most people care about fashion. You may not care about your jeans, but you do care about your phone, or your tablet, or your OS.

Which electronic gadget have you kept for more than 5 years? Even if you keep yours that long, how many of your friends do?

A 5-year-old cell phone is weird; most people won’t keep one for that long.

I don’t agree. You should say: a small portion of people in a rich position care about fashion, phones, tablets, OS, because they have the resources (time and money) to do so.

Most people on earth don’t have resources.

They care about food & water.

Once they have, they care about having a roof.

Then, they care about having clothes.

Then they care about communication (getting access to phone and internet, proportionally expensive when you earn very little), transport, and education.

You may not realize it, but that already describes over 90% of the 7 billion people on earth. Your comment applies only to the remaining 10% or less.

llhagan, hi,

charlie answered the “fashion” side, so i’ll answer the other aspect. the advances being made on improvements in geometries can impact in one of two ways: speed or power. however, there’s a limit on designs of the IC state-machines which often hampers the ASIC designers in their attempt to maximise speed. Ingenic, for example, have not pushed THEIR OWN X-Burst Vector Unit up to 1GHz, which they were happy to position as a 3D Graphics Accelerator, but are happy to push it as a 500MHz “Video” Accelerator, for their new 1GHz jz4770.

why do you think that is?

it’s because the 8-stage pipeline, when pushed up to 1GHz, turned out to not be long enough. Ingenic hit a brick wall that required them to completely redesign their Unique Selling Point flagship co-processor. rather than do that, they bought an off-the-shelf 3D GPU Engine from Vivante.

however – just because they could not achieve 1ghz for the X-Burst DOES NOT mean that they could not take advantage of the power efficiency offered by 65nm.

thus, if you were to plot their CPU on the Koomey’s Law Graph, i think you’ll find that it fits – as does the ARM Cortex A9 (at 1e17 in 2011).

the point is that whilst the *speed* of CPUs is slowing down (because everybody’s hitting a pipeline-based “Brick Wall” in the design of CPUs), the gains in efficiency due to reduced geometries are *not* slowing down – they are doubling at a fixed rate.

http://www.koomey.com/post/2678649528

Actually, I completely agree with your assessment that design/fashion/status will become a dominant driver of new sales over simple performance improvements. If you look at the auto market, things haven’t changed substantially there in years, yet people buy new cars once every few years. This is partially due to replacement due to wear-out, but a large driver of new car purchases is also the desire to upgrade or to show social status. Apple’s i-series of products certainly play to this human instinct to flaunt status, and there will be more like it to come.

However, I think the good news is that high-tech will be somewhere in between the apparel fashion industry and the auto industry. While there is a thriving market for custom automobiles, it’s uncommon that a small shop can build one from scratch: even the auto tweakers typically start with a pre-built chassis and engine platform. This is because it’s not economical to tool up small-scale production for engines and chassis. On the other hand, in apparel it’s easy to go from runway models to sales since there is no tooling, and the premiums for top brands are high enough to afford the manual labor to hand-craft the apparel.

Electronics will probably be somewhere in the middle. As technology stabilizes, people will feel more comfortable spending a large amount of money on status electronics, because they can be kept, used, and resold for several years without fear of utter obsolescence. This enhanced durability creates a real secondary market for gadgets which converts the gadget market from a value-constrained disposable purchase into a potential investment with real resale value.

The higher margins afforded by high-design will create the space for small but innovative shops to survive. Indeed, people will still want to replace their status-gadgets every couple of years, but the design and manufacture of status gadgets will no longer be relegated to Fortune 500 companies (and somehow, Vertu). Common computing platforms will ensure adequate functionality and performance without a huge R&D investment; software of course is malleable and can be updated well after the point of sale, and over time F/OSS tends to get quite good; and tooling NRE can be amortized within the sale of hundreds to thousands of units. I think these factors favor the rise of small shops to compete with large corporations in meeting the fickle demands of consumers.

[…] ‘open’ of the proprietary hardware platforms around – or an Arduino. Huang’s full blog post on the subject is well worth a read if you’re at all interested in the open hardware […]

As a devotee of the anti-Microsoft culture, I am looking forward to the day when faster computers don’t show up every couple of years and automatically force people into the latest version of Windows. And it is slowing down already. I have a four-year-old laptop (running Ubuntu Linux, thank you very much) and it’s running just fine; there is no need to even think about an upgrade. Back in the 1990s a four-year-old computer would have been practically unusable. As the hardware upgrade treadmill continues to slow down, opportunities will abound for software authors to get creative with efficiency.

How will the push for trusted computing modules affect this?

Won’t every piece of software and hardware be signed?

I feel “open” is going to be sacrificed for cheap and easily replaceable.

[…] via Why the Best Days of Open Hardware are Yet to Come « bunnie’s blog. […]

funny… i was just writing up a post to the http://openhardwaresummit.org mailing list about a way to accelerate the process by which enthusiasts can work with the latest mass-produced embedded hardware.

the initiative, which has a specification here – http://elinux.org/Embedded_Open_Modular_Architecture/PCMCIA – is based around the fact that, just as mentioned above, the development of processor “speeds” is slowing down. funnily enough, this allows so-called “embedded” processors to catch up, and these embedded CPUs are low-power enough that an entire computer built around one is still desirable yet consumes between 0.5 and 3 watts instead of 10 to 500 watts.

if anybody would like to participate in this initiative, please do join the arm-netbook mailing list – http://lists.phcomp.co.uk/mailman/listinfo/arm-netbook

Bunnie, I saw your talk at the OHS but I’m very glad you’ve posted this because the whole summit was kind of overwhelming for me. Your insight is most appreciated and I look forward to seeing how things turn out in the next decade or two.

Thanks

Dan

sorry, i posted the EOMA initiative but then i remembered something very very important: there was an article on slashdot recently that pointed to an alternative to “Moore’s Law”, which showed a fascinating graph, plotting the log of “Performance per Watt” vs time, and, whilst Moore’s Law is slowing down, “Energy Efficiency” most definitely is not.

let me see if i can find it… yep! google “moore’s law site:slashdot.org” found it immediately:

http://hardware.slashdot.org/story/11/09/13/2148202/whither-moores-law-introducing-koomeys-law

so in that context, mr buh-bunnie’s buhlogghh is shown to be rather… insightful.

What a load of crap – thanks for comparing apples & oranges – it’s apparent you sold your soul to socialism years ago.

Moving right along, Moore refers to transistors on a chipset – not the silly assembly code bits you are referring to – bottom line: nobody gives a crap about your masters in CS or Assembly Language programming – it will all be obfuscated by the cloud, so if you could please pass the pipe, it’s quite obvious you’re smoking something.

Open Hardware is such a joke – just look at Cisco UCS! If you think the private sector is going to expose their R&D in the name of better code then you were truly Stanford & NOT Ivy League. BTW, when you are finished with your assembly auto fellatio, please feel free to join us in the cloud where the chipset is a commodity – CHEERS!

Sorry, no clouds today here, the sky is blue. Don’t know in which cloud you hide.

I think open hardware is interesting for small and even for mid-sized businesses. Flexibility and common IP are a strength that is often overlooked. Small companies are becoming successful with open HW/SW, and this trend is accelerating. It simply scales for small companies!

Even the majors are now using more and more FLOSS!

Example: I work for an automotive electronics supplier (>160,000 employees). I was very surprised that more and more car ECUs are now switching to a GNU/Linux base! Can you believe that? Previously I thought that Linux and other FLOSS IP could not be used in the automotive industry because of safety concerns, development control, support, whatever. We used only proprietary code. Now we have simply realized that Linux is more flexible, more stable, and cheaper, and that professional support is widely available…

Of course, open HW will not gain momentum as fast. But the ongoing trend toward standardization of HW will definitely move the expertise from board developers to silicon makers. Board design will then become “available source”, for many reasons, including repair. Today, schematics of cell phones are widely available (usually unofficially), simply because there is much less value and know-how in them. This makes repair an option!

Joe,

Wow that was very positive & constructive feedback that you provided & many interesting & salient talking points. I want you to look in the mirror & know that you have never had an original thought or idea in your petty half life other than to troll blogs.

I feel at this juncture it would be appropriate for you to remove yourself from existence by any & all means necessary & you contribute nothing other than expelling foul scented carbon dioxide.

Thank you for your time Joe. Please implement your timely end post haste.

Sincerely,

God.

Interesting read.

It’s almost like a plot taken from ‘The Twilight Zone’

nanananananna

[…] Why the Best Days of Open Hardware are Yet to Come « bunnie’s blog. […]

Nice post Huang and thanks for taking the risk of looking into the future and trying to predict it.

I don’t know if the future for OSHW is going to be what you envision, and if the rationale makes any sense.

What is certain is that OSHW is driven by people who love making things, understanding what is going on, and sharing it. A key enabler for all of that has been the drastic drop in the price of many components, thanks to the consumer electronics mass market. Another has been the Internet and how easy it is to share knowledge today.

So rather than a future in which OSHW grows because the large players get into trouble and the mass market stands still, I envision a growing OSHW community precisely thanks to the mass market. The huge cell phone and entertainment device industries are what brought accelerometers down to their current price. People who love electronics and sharing knowledge are not necessarily motivated by business success but by getting to the bottom of things.

That changed for the better and is not going to stop.

I’m sure physical limits have much to do with the slowdown of CPU improvements. But that same “physical limits” argument has been used to predict the imminent demise of Moore’s law ever since its inception, and we’ve always found ways around it.

I think a lot of the slowdown also has to do with the fact that computers have finally become fast enough for most practical purposes. Sure, I’d like my web page to load a few milliseconds faster, but a two-fold improvement in speed won’t be nearly as important to the user as it was even five years ago. Video games, another big driver of CPU improvements for the past several decades, are now fast and realistic enough that the gameplay and quality of the art matter far more than hardware performance.

I remember having to wait a minute for even a gray-background html page to load on NCSA Mosaic. Or having to wait half an hour for a single frame of a simple 3D object to render. There’s a huge difference in user experience when you scale from hours to minutes, and minutes to seconds, and seconds to milliseconds. Beyond that? Not so much.

Interesting post, it will be interesting to see how things progress.

One thing about Moore’s Law.

Device counts double every 18 months for the same price.

Another way of stating it is:

Prices drop in half for the same number of devices every 18 months.

Even if device counts per die do not increase, the restatement could still hold true through the development of lower-cost fab equipment, or once the cost of fabs is completely amortized.
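The two statements above are the same exponential read in two directions. A small sketch, assuming the 18-month period from the comment and made-up starting values (1,000 devices, a price of 100); the function names are hypothetical:

```python
# Sketch of the two equivalent phrasings of Moore's Law quoted above.
# Assumes the 18-month period stated in the comment; starting values are
# illustrative only.

PERIOD_MONTHS = 18  # doubling/halving period from the comment


def devices_at_fixed_price(initial_devices: float, months: float) -> float:
    """Device count the same money buys after `months`."""
    return initial_devices * 2 ** (months / PERIOD_MONTHS)


def price_at_fixed_devices(initial_price: float, months: float) -> float:
    """Price of the same number of devices after `months`."""
    return initial_price / 2 ** (months / PERIOD_MONTHS)


if __name__ == "__main__":
    # After 36 months (two periods): 4x the devices, or 1/4 the price.
    print(devices_at_fixed_price(1000, 36))   # 4000.0
    print(price_at_fixed_devices(100.0, 36))  # 25.0
```

Multiplying the two growth factors for the same elapsed time always gives 1, which is the sense in which the restatement says the same thing.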

New fab designs that take advantage of FPGA-only chips might be possible. 2D light interference patterns could be used to create only regular patterns, which must then be programmed.

Just my USD 0.02 worth.

[…] Why the Best Days of Open Hardware are Yet to Come « bunnie’s blog This piece about the implications for small inventors of a slowdown in Moore’s law is truly insightful […]

Instead of “5 nm is about the space between 100 silicon atoms”, in fact 10 atoms was meant, right? Lattice spacing for silicon is 0.5430710 nm [1].

1. https://secure.wikimedia.org/wikipedia/en/wiki/Silicon#cite_ref-21

You are correct. I had read that as 0.5 Angstrom, but it is actually 0.5 nm. Fixed now.
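The corrected figure follows from simple division. A rough sketch, counting one atom per lattice constant as the comment does (an order-of-magnitude estimate, not a crystallographic atom count); `lattice_lengths` is a hypothetical helper:

```python
# Rough count, as in the exchange above: treat one silicon lattice constant
# as the spacing per atom along a line. Order-of-magnitude only.

SI_LATTICE_CONSTANT_NM = 0.5430710  # value quoted in the comment


def lattice_lengths(feature_nm: float, spacing_nm: float = SI_LATTICE_CONSTANT_NM) -> float:
    """How many `spacing_nm` lengths fit across a feature of `feature_nm`."""
    return feature_nm / spacing_nm


if __name__ == "__main__":
    print(round(lattice_lengths(5.0), 1))   # 9.2, i.e. about 10
    print(round(lattice_lengths(5.0, 0.05)))  # 100, the 0.5 Angstrom misreading
```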

Interesting post. However, I highly doubt that the slowdown of Moore’s law will last long enough for the described shift in industry to happen. It is true that we are hitting a technology wall, but only until a new material or way of computing is discovered (or rather, adequately developed) to revolutionize the way things are. Graphene, quantum computing, optoelectronics, and memristors are all promising technologies that will help boost performance even further. It’s already hard keeping track of all the new research being done everywhere.

Another point is the fact that the primary demand for performance comes from applications and software. There may come a time when more performance for the average consumer will simply not be needed because it won’t mean better user experience. We’re gonna need a new “killer app” to use all that extra power and do something cool.

Or maybe in the far, far future, when we get close to the boundaries of human perception, it simply won’t make sense, because we will have hit the (larger) wall of human biology. We have already finished the color race (no one sees more colors than 32-bit TrueColor can produce), and the photo-realistic graphics race is only a matter of time.
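For scale: in the common 32-bit TrueColor layout, 8 bits each go to red, green, and blue, and the remaining 8 bits hold alpha or padding, so only 24 bits encode color. A short sketch of the count, assuming that layout; `distinct_colors` is a hypothetical helper:

```python
# Count distinct colors in a packed RGB(A) pixel, assuming 8 bits per color
# channel and 3 color channels; the extra 8 bits of a 32-bit pixel (alpha or
# padding) do not add new colors.


def distinct_colors(bits_per_channel: int = 8, color_channels: int = 3) -> int:
    """Number of distinct colors encodable with the given channel depths."""
    return 2 ** (bits_per_channel * color_channels)


if __name__ == "__main__":
    print(distinct_colors())  # 16777216
```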

Hi,

Slightly off-topic question, but related to the mention of the growing importance of FPGAs: does anyone on this thread have any recommendations for a good starter FPGA development board/system?

In my day job I’m a pure embedded SW guy, but I’d like to at least take some baby steps into the HW side of things, and FPGAs seem like a fascinating way to do that (I’ve also just finished re-reading ‘Behind Deep Blue’ and have been inspired by custom HW circuits again :)

Cost is unfortunately an issue, so cheap yet powerful would be great ;), and I’d like a system where the toolchain is as open source as possible.

Any HW recommendations or pointers to reading material would be much appreciated.

Thanks !

Kemo

Manufacturers everywhere worked out a long time ago that making things that last is not in their economic interests. A lot of engineering goes into designing components that will fail shortly after the warranty expires.

Humans need to get over the delusion of longevity of assets. Everything we buy today is a consumable, even the ones that don’t look like one. For example, the last time my dishwasher broke I called in a repairman who charged me half the cost of a whole new one … only to have the machine break down again with a different problem within a year.

“Quality” is now a bad word in manufacturing. It means less repeat business. Even if your artificially-constructed graphs [using slightly different equations which still keep the essence of Moore’s law in place, I can show you graphs that never have any intersection] illustrate a widening window of opportunity of using non-Moore components, manufacturers will ensure the era of repair is long behind us.

@jj olla: I can see sort of where you are coming from, but only for certain bands of the market. There are still companies in any sector that major on reliability – for instance, I drive a 17-year-old Subaru, run an eight-year-old laptop, a five-year-old desktop. I had the same mobile for four years, and that was second-hand, and still have it as a backup – the only reason I changed it was because the new smartphones had evolved to the point that I could see a point in buying one (shame I went for Nokia, which is quietly imploding!).

Your dishwasher may have been from the lower end of the market, where throughput is important. If you can afford a bit more, buy from a better company next time – it will probably pay off.

>> “Quality” is now a bad word in manufacturing. It means less repeat business.

That is the situation today, due to a temporary (20-year?) state of affairs that combines ultra-cheap labor and materials for production with expensive labor for repair.

This situation is quickly evolving. Labor costs are sinking in rich countries and rising very quickly in low-cost countries. They will equalize. Yes, you will earn the same as the average Chinese guy within 10 years!

Repair becomes an option again!

Furthermore, material resources are becoming scarce at an astonishing speed.

Gold for IC bond wires? Expensive, and rising.

Energy? Expensive, and rising.

Rare earths, copper, oil (for plastics)? You guessed it…

Costs are truly exploding, for production materials and labor, even in China !

Don’t expect to find such a cheap dishwasher in 10 years.

Don’t expect to be able to afford as many gadgets, cars, etc in 10 years.

Don’t expect to find such bad products as standard in 10 years, because the gap between a good and a bad product will reduce drastically. People will buy less shitty products.

That’s my view.

jj olla, you’re short-sighted.

sorry, but it’s true: if there is one thing that has always been true in life, it’s that “nothing lasts forever”. be it a species, mountains or even suns, everything has its time and then dies away, and the same goes for the age of large manufacturers. the limited resources of our planet will push these fast-turnover companies out of the market in favor of small, high-quality companies.

it’s a cycle as old as time.

Hardware is fundamentally different from software. Anyone can download some code and compile and run it in minutes, for only the cost of the hosting of the project and the Internet traffic. Anyone, with just a little programming experience can contribute something to the code. That’s perfect conditions for growing an open culture, because anyone can participate.

Hardware on the other hand has longer lead times and higher setup costs, even if the price per unit can be squashed with high volumes. This is especially true if custom silicon is involved. Sure, you can etch PCBs for fun in your garage, but that’s not how the next smartphone is going to be built. And you can forget setting up a nm silicon manufacturing process in your garage. :)

So, what about FPGAs? Sure, they’re a way to reduce the barrier for developing ASICs, but they won’t do much towards amateur development of complex electronics. You still need the long development cycles, and for that reason it suits commercial players better. And with the time and money invested, I don’t see what said commercial players would have to gain from opening their designs.

In short: Linux and Firefox are examples of highly evolved open source software that is commercially viable. I don’t see the Linux or Firefox of open hardware (in terms of complexity, distribution and commercial viability) surfacing anytime soon.

“And you can forget setting up a nm silicon manufacturing process in your garage. :)”

don’t be so sure of that.

5 years ago you would not have believed you could own a 3D printer at home; now they’re homemade and work as well as, if not better than, the high-priced ones. it’s not a question of IF but WHEN the day will come when you can do nm-scale manufacturing, if not at home then at a local low-cost maker.

and it will happen a lot quicker than you’d think.

[…] don’t think there’s a video of the talk, but you can read his blog post summarizing the talk or download bunnie’s OHWS slide […]


[…] Why the Best Days of Open Hardware are Yet to Come […]

[…] Why the Best Days of Open Hardware Are Yet To Come (Bunnie Huang) — as Moore’s law decelerates, there is a potential for greater standardization of platforms. A provocative picture of life in a world where Moore’s Law is breaking up. A must-read. […]

[…] Very enlightening. […]

[…] comments was one from Michael Hay (HDS) which pointed to another blog post by Andrew Huang in his bunnie’s blog (Why the best days of open hardware are ahead) where he has an almost brilliant discussion on how Moore’s law will eventually peter out […]

It seems to me that “open hardware’s bright future” is pretty dark for everybody else. Are you really hoping that technological progress and innovation will stop and people will live in extreme poverty?


[…] any other computer. For an optimistic vision of the future of homebrew hardware, you might enjoy http://www.bunniestudios.com/blo… […]

[…] a detailed (but not too technical) look at Moore’s Law and the future of open hardware, including the fascinating idea of “heirloom laptops,” which are representative of a […]

[…] talk was great and he’s followed it up with an equally good blog post which I suggest you read. Huang was talking about the future of the open source hardware movement, […]

An interesting read. Certainly in the past things did indeed come with circuit diagrams. Even my old Commodore 64 and (later) a 1084S Commodore monitor came with them! These devices could be repaired.

I think the biggest problem, though, is the sheer physical difficulty of trying to repair any sort of modern-day surface-mount technology. The components are so tiny that they need special equipment to repair, and even then it isn’t easy. Certainly Joe Bloggs with a ’70s soldering iron would be out.

In that event I guess there are two other ideas which could be tried: one, make technology more reliable (so it doesn’t break in 6 months), and two, make it modular. Just think about (say) a large HD TV today; if it breaks you have to bin the entire TV. Wouldn’t it be good if you could open it up and just change one part? Though today we aren’t even allowed to do that; opening up a piece of electronics often voids your warranty, for example.

I also wonder if the new technology of graphene will change things? Apparently that technology is said to carry on making things smaller and faster.

Though I did think for a moment: even if that is possible in the future, what about other things? There could be more to this than meets the eye. What about resources, for example? You could make a CPU twice the speed of an existing one that hardly takes any power with new technology, but if you haven’t got (say) any resources (e.g. oil) to put in your HGVs and actually transport things to people and shops, it’s gonna be a dead end!

Open hardware IMHO can’t come quick enough, though. Even existing setups aren’t free of problems; for example, look at some of the small ARM systems you can buy: some still have proprietary parts (e.g. the video display hardware).

ljones


[…] interlinked and that more of the bridging of the two is needed. We’re just waiting for the Moore’s Law treadmill to slow down enough for the two to sync up […]

[…] Why the Best Days of Open Hardware are Yet to Come « bunnie’s blog. […]

[…] are interlinked and that more of the bridging of the two is needed. We’re just waiting for the Moore’s Law treadmill to slow down enough for the two to sync up again. Inspired by Firefly, Laura Walker Hudson shares a […]

Hi Andrew,

I’m a Chinese web developer and really interested in the open source hardware movement. I like your article, so I translated it into Chinese and put it on my blog; here is the URL: http://140041.t89.cn/2011/10/24/why-the-best-days-of-open-hardware-are-yet-to-come-cn-translate/, and I hope your article can inspire more Chinese engineers. Thanks!

[…] not only continuing, but accelerating. While Moore's law is not producing material advances in computing power, sensors that produce data in more computer ready formats such as 2.5D rangers reduces the need for […]

I don’t buy it because it doesn’t take into consideration a major factor: the rise of commons-based peer production.

The author assumes that in the near future design, production, and distribution will be organized in the same fashion. Evidence shows that these processes are undergoing profound transformations. Entities like SENSORICA http://www.sensorica.co are emerging. These are open, decentralized, and self-organizing value networks. They can be small or very large. They are very well adapted to dynamic environments. In fact, these new economic entities, which thrive on open innovation and put the individual first, will have an advantage over large corporations in the high-speed development stage of a given technology. In other words, these new entities are well adapted to high-speed innovation, and this is the primary reason, in my view, why large corporations will suffer greatly within the next 5-10 years.

The world is changing in a fundamental way. It is a mistake to assume that design, production, and distribution will look the same in the near future. One cannot extrapolate corporate structures too far into the future, for they might go extinct pretty soon.

excellent article on open hardware!

First off I want to thank Caleb for doing this; I’m unsure whether it’s related to an email I sent them the other day. Now I will explain why I treat you guys with such contempt.

Does Practice Fusion have the capacity to integrate two different types of EMR into a single EHR? For example, Intergy by Sage and Titanium schedule.