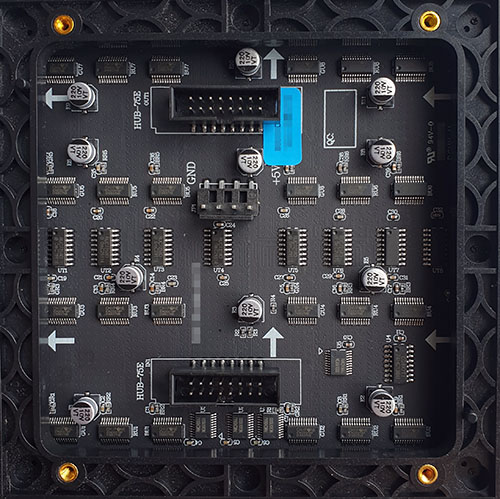

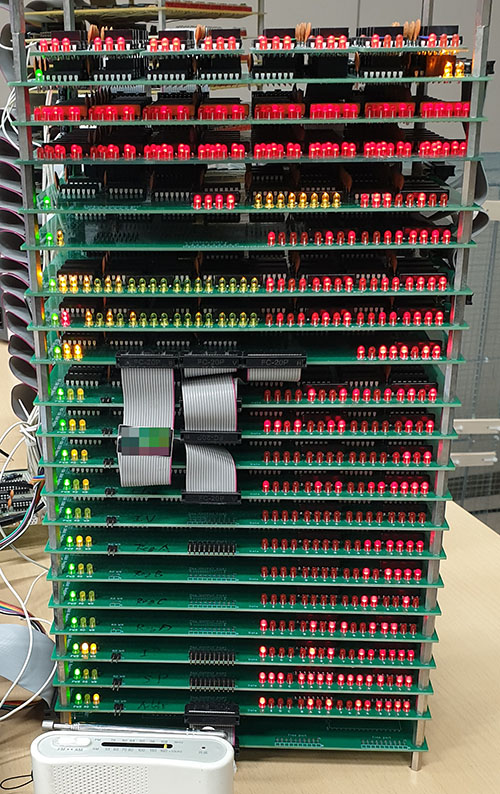

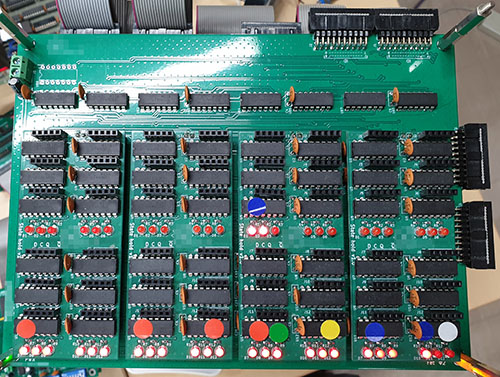

The ware for December 2024 is a 2mm pitch, 64×64 LED panel purchased from Evershine Opto Limited. Their sales part number is ES-P2-I, but the silkscreen says DCHY-P2-6464-1515-VP. The seller is just the name slapped on the box; like most commodity wares, there are likely multiple channels offering the exact same make and model. So, I’ll accept any generic that more or less matches the spec as the winner.

As a matter of the “spirit” of the competition, my goal is to encourage thoughtful analysis rather than “first to post”, so I will tend to award more insightful or accurate answers over quick answers. It’s a balance between rewarding “open discussion” and rewarding “first to post”: I don’t want things to devolve into a situation where nobody shares ideas out of fear that someone might riff off of their thoughts and win the prize, but I also don’t want a situation where folks are just throwing partial guesses at the wall to claim priority.

That being said, I had observed this would be an easy ware, which means priority goes to more insightful answers, not the first correct answer. To that end, while “h” got the quickie out, Joe did produce a link to an exact match on the ware (which, significantly, is not what I get when I do an image search for the ware, although perhaps the results are geolocated). Meanwhile, Ian commented on some actual design features, an insight you wouldn’t get out of a rote image search.

In my mind, all three could be eligible for the prize in this case. I think if “h” had looped back and commented on some other design feature of the ware (doesn’t have to be lengthy – similar to Ian’s is sufficient), it would have been straightforward to give the prize to “h”. But because the ware was so easy to guess, the prize goes to Joe for producing an exact link (the part number, pixelated in the contest image, is an exact match!), as that took a little bit more research. Congrats, email me for your prize!

Tough one to judge, though; I suppose I asked for that by posting a relatively easy ware.