I’d like to share a project I’m working on that could have an impact on your future freedoms in the digital age. It’s an open video development board I call NeTV2.

The Motivation

It’s related to a lawsuit I’ve filed with the help of the EFF against the US government to reform Section 1201 of the DMCA. Currently, Section 1201 imbues media cartels with nearly unchecked power to prevent us from innovating and expressing ourselves, thus restricting our right to free speech.

Have you ever noticed how smart TVs seem pretty dumb compared to our phones? It’s because Section 1201 enables a small cartel of stakeholders to pick and choose who gets to process video. So, for example, anyone is allowed to write a translation app for their smartphone that does real-time video translation of text. However, it’s potentially unlawful to build a box, even in the privacy of my own home, that implements the same thing over the HDCP-encrypted video feeds that go from my set top box to my TV screen.

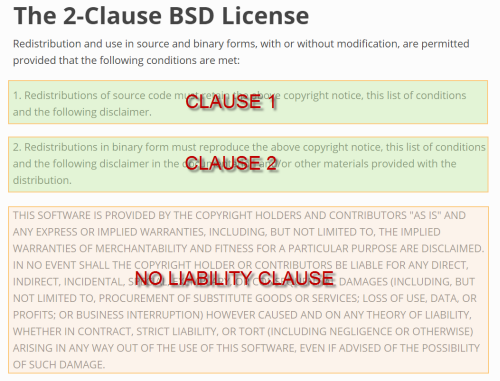

This is due to a quirk of the DMCA that makes it unlawful for most citizens to bypass encryption – even for lawful free-speech activities, such as self-expression and innovation. Significantly, since the founding of the United States, it’s been unlawful to make copies of copyrighted work, and I believe the already stiff penalties for violating copyright law offer sufficient protection from piracy and theft.

However, in 1998 a group of lobbyists managed to convince Congress that the digital millennium presented an existential threat to copyright holders, and thus stiffer penalties were needed for the mere act of bypassing encryption, no matter the reason. These penalties are in addition to the existing penalties written into copyright law. By passing this law, Congress effectively turned bypassing encryption into a form of pre-crime, empowering copyright holders to be the sole judge, jury and executioner of what your intentions might have been. Thus, even if you were to bypass encryption solely for lawful purposes, such as processing video to translate text, the copyright holder nonetheless has the power to prosecute you for the “pre-crimes” that could follow from bypassing their encryption scheme. In this way, Section 1201 of the DMCA effectively gives corporations the power to license when and how you express yourself where encryption is involved.

I believe unchecked power to license freedom of expression should not be trusted to corporate interests. Encryption is important for privacy and security, and is winding its way into every corner of our life. It’s fundamentally a good thing, but we need to make sure that corporations can’t abuse Section 1201 to also control every corner of our life. In our digital age, the very canvas upon which we paint our thoughts can be access-controlled with cryptography, and we need the absolute right to paint our thoughts freely and share them broadly if we are to continue to live in a free and just society. Significantly, this does not diminish the power of copyrights one bit – this lawsuit simply aims to limit the expansive “pre-crime” powers granted to license holders, that is all.

Of course, even though the lawsuit is in progress, corporations still have the right to go after developers like you and me for the notional pre-crimes associated with bypassing encryption. However, one significant objection lodged by opponents of our lawsuit is that “no other users have specified how they are adversely affected by HDCP in their ability to make specific noninfringing use of protected content … [bunnie] has failed to demonstrate … how “users ‘are, or are likely to be,’ adversely affected by the prohibition on circumventing HDCP.” This is, of course, a Catch-22, because how can you build a user base to demonstrate the need for freedoms when the mere act of trying to build that user base could be a crime in itself? No investor would touch a product that could be potentially unlawful.

Thankfully, it’s 2018 and we have crowd funding, so I’m launching a crowd funding campaign for the NeTV2, in the hopes of rallying like-minded developers, dreamers, users, and enthusiasts to help build the case that a small but important group of people can and would do more, if only we had the right to do so. As limited by the prevailing law, the NeTV2 can only process unencrypted video and perform encryption-only operations like video overlays through a trick I call “NeTV mode”. However, it’s my hope this is a sufficient platform to stir the imagination of developers and users, so that together we can paint a vibrant picture of what a future looks like should we have the right to express our ideas using otherwise controlled paints on otherwise denied canvases.

Some of the things you might be able to do with the NeTV2, if you only had the right to do it…

The Hardware

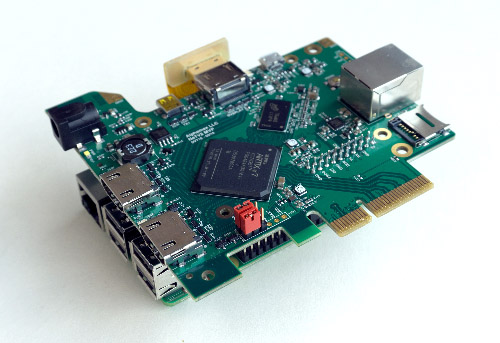

The heart of the NeTV2 is an FPGA-based video development board in a PCIe 2.0 x4 card form factor. The board supports up to two video inputs and two video outputs at 1080p60, coupled to a Xilinx XC7A35T FPGA, along with 512 MiB of DDR3 memory humming along at a peak bandwidth of 25.6 Gbps. It also features some nice touches for debugging including a JTAG/UART header made to plug directly into a Raspberry Pi, and a 10/100 Ethernet port wired directly to the FPGA for Etherbone support. For intrepid hackers, the reserved/JTAG pins on the PCI-express header are all wired to the FPGA, and microSD and USB headers are provisioned but not specifically supported in any mode. And of course, the entire PCB design is open source under the CERN OHL license.

The NeTV2 board as mounted on a Raspberry Pi

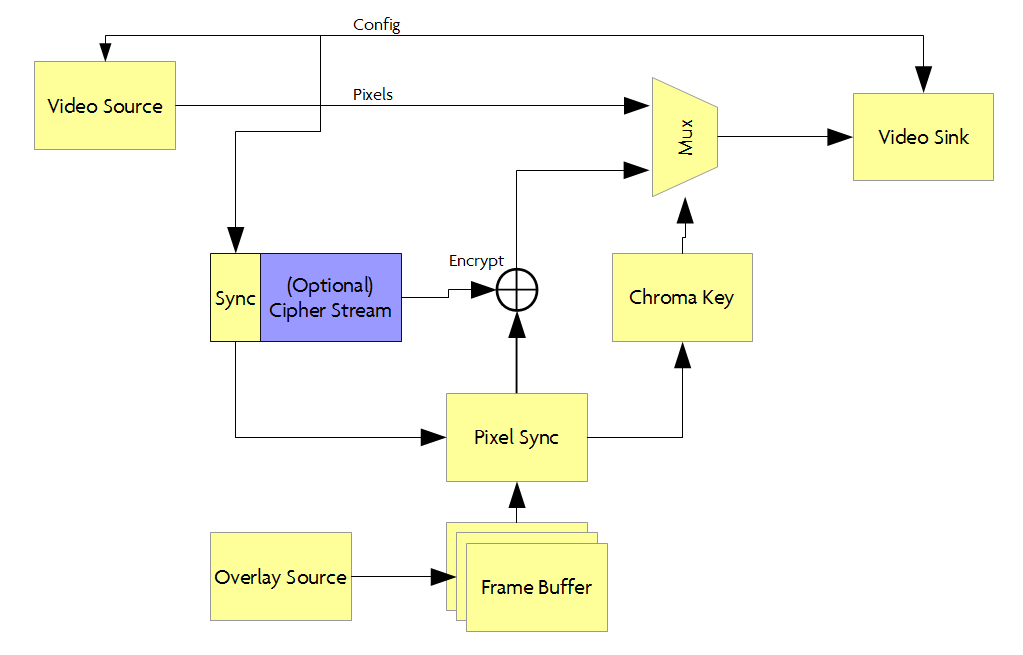

The design targets two major use scenarios which I refer to as “NeTV classic” mode (video overlays with encryption) and “Libre” mode (deep video processing, but limited to unencrypted feeds due to Section 1201).

In NeTV classic mode, the board is paired with a Raspberry Pi, which serves as the source for chroma key overlay video, typically rendered by a browser running in full-screen mode. The Raspberry Pi’s unencrypted HDMI video output is fed into the NeTV2 and sampled into a frame buffer, which is “genlocked” (e.g. timing synchronized) to a video feed that’s just passing through the FPGA via another pair of HDMI input/outputs. The NeTV2 has special circuits to help observe and synchronize with cryptographic state, should one exist on the pass-through video link. This allows the NeTV2 to encrypt the Raspberry Pi’s overlay feed so that the Pi’s pixels can be used for a simple “hard overlay” effect. NeTV classic mode thus enables applications such as subtitles and pop-up notifications by throwing away regions of source video and replacing it entirely with overlay pixels. However, a lack of access to unencrypted pixels disallows even basic video effects such as alpha blending or frame scaling.

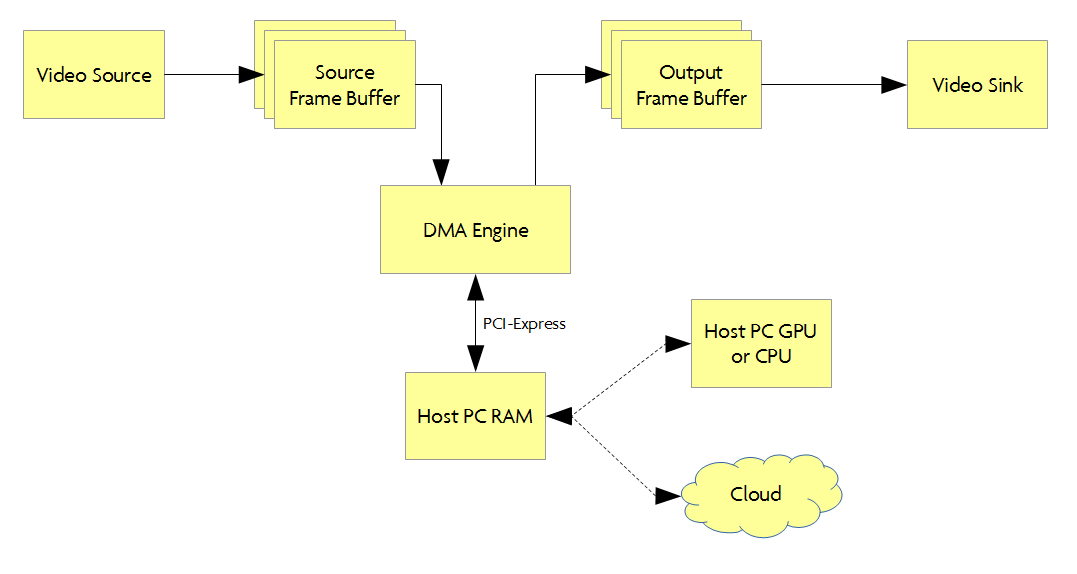

In Libre mode, the board is meant to be plugged into a desktop PC via PCI-express. Libre mode only works with unencrypted video feeds, as the concept here is full video frames are sampled and buffered up inside NeTV2 so that it can be forwarded on to the host PC for further processing. Here, the full power of a GPU or x86 CPU can be applied to extract features and enhance the video, or perhaps portions of the video could even be sent to to the cloud for processing. Once the video has been processed, it is pushed back into the NeTV2 and sent on to the TV for viewing. Libre mode is perhaps the most interesting mode to developers, yet is very limited in every day applications thanks to Section 1201 of the DMCA. Still, it may be possible to craft demos using properly licensed, unencrypted video feeds.

The reference “gateware” (FPGA design) for the NeTV2 is written in Python using migen/LiteX. I previously compared the performance of LiteX to Vivado: for an NeTV2-like reference design, the migen/LiteX version consumes about a quarter the area and compiles in less than a quarter the time – a compelling advantage. migen/LiteX is a true open source framework for describing hardware, which relies on Xilinx’s free-to-download Vivado toolchain for synthesis, place/route, and bitstream generation. There is a significant effort on-going today to port the full open source FPGA backend tools developed by Clifford Wolf from the Lattice ICE40 FPGAs to the same Xilinx 7-series FPGAs used in NeTV2. Of course, designers that prefer to use the Vivado tools to describe and compile their hardware are still free to do so, but I am not officially supporting that design methodology.

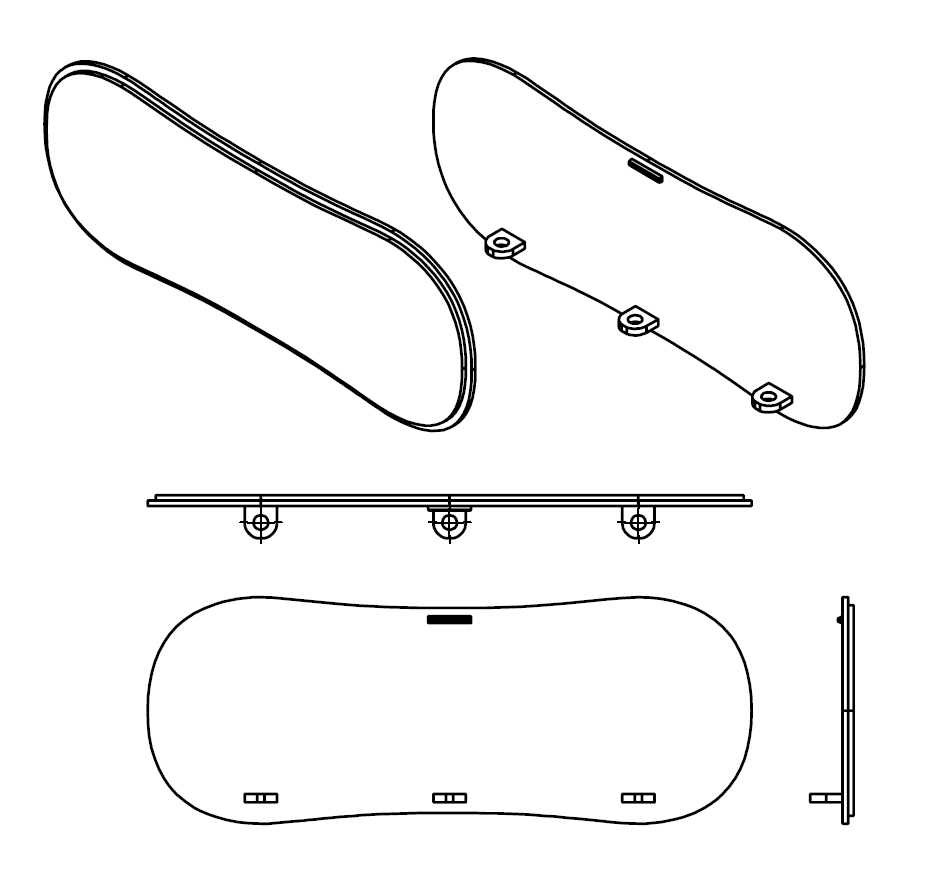

I wanted to narrow the gap between development board and field deployable solution, so I’ve also designed a hackable case for the NeTV2. The case can hold the NeTV2 and a mated Raspberry Pi, and consists of three major parts, a top shell, bottom shell/back bezel, and a stand-alone front bezel. It also has light pipes to route key status LEDs to the plane of the back bezel. It’s designed to be easily disassembled using common screw drivers, and features holes for easy wall-mounting.

Most importantly, the case features extra space with a Peek Array on the inside for mounting your own PCBs or parts, and the front bezel is designed for easier fabrication using either subtractive or additive methodologies. So, if you have a laser cutter, you can custom cut a bezel using a simple, thin sheet of acrylic and slot it into the grooves circumscribing the end of the case. Or, if you have a low-res 3D printer, you can use the screw bosses to attach the bezel instead, and skip the grooves. When you’re ready to step up in volume, you can download the source file for the bezel and make a relatively simple injection mold tool for just the bezel itself (or the whole case, if you really want to!).

The flexibility of the PCI-express edge connector and the simplified bezel allows developers to extend the NeTV2 into a system well beyond the original design intention. Remember, for an FPGA, PCI-express is just a low-cost physical form factor for generic high speed I/O. So, a relatively simple to design and cheap to fabricate adapter card can turn the PCI-express card-edge connector into a variety of high-speed physical standards, including SATA, DisplayPort, USB3.0 and more. There’s also extra low-speed I/O in the header, so you can attach a variety of SPI or I2C peripherals through the same connector. This electrical flexibility, combined with PCBs mounted on the Peek Array and a custom bezel enables developers to build a customer-ready solutions with minimal effort and tooling investment.

The NeTV2 is funding now at Crowd Supply. I’m offering a version with a higher-capacity FPGA only for the duration of the campaign, so if you’re developer be sure to check that out before the campaign ends. If you think that reforming the DMCA is important but the NeTV2 isn’t your cup of tea, please consider supporting the EFF directly with a donation. Together we can reform Section 1201 of the DMCA, and win back fundamental freedoms to express and innovate in the digital age.