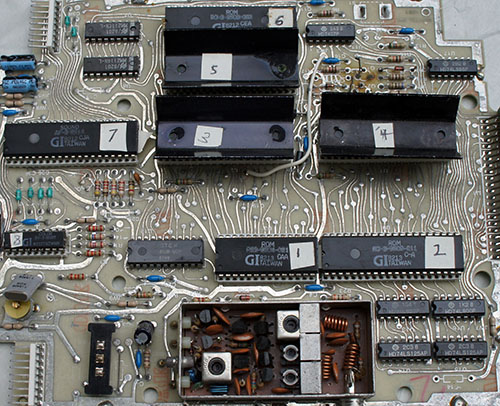

The Ware for January 2021 is a LogiMetrics 921A RF signal generator. Or at least, that’s what the caption says on the picture that was sent to me. The insides don’t quite match up with other photos on the Internet, but it seems there were a few revisions of the equipment over time, and various enthusiasts have taken it upon themselves to overhaul their devices before passing them on to a new owner.

I’ll give the prize to Allen Smith for being the first to roughly identify the gear category and vintage. Congrats, email me for your prize!

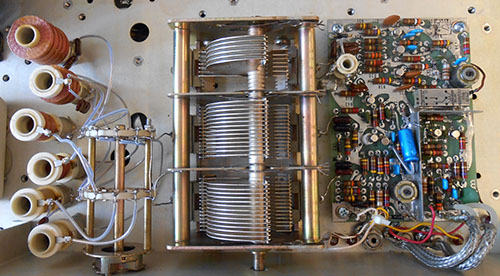

I really love the classic, air-gap variable capacitor on this device. It’s exactly what I would want on hand to illustrate how capacitors work in an entry-level electronics class…if I had an infinite budget.