The new 25% tariffs announced by the USTR, set to go into effect on July 6th, are decidedly anti-Maker and ironically pro-offshoring. I’ve examined the tariff lists (List 1 and List 2), and they tax the import of basic components, tools and sub-assemblies, while giving fully assembled goods a free pass. The USTR’s press release is careful to mention that the tariffs “do not include goods commonly purchased by American consumers such as cellular telephones or televisions.”

Think about it – big companies with the resources to organize thousands of overseas workers making TVs and cell phones will have their outsourced supply chains protected, but small companies that still assemble valuable goods from basic parts inside the US are about to see significant cost increases. Worse yet, educators, already forced to work with a shoestring budget, are going to return from their summer recess to find that basic parts, tools and components for use in the classroom are now significantly more expensive.

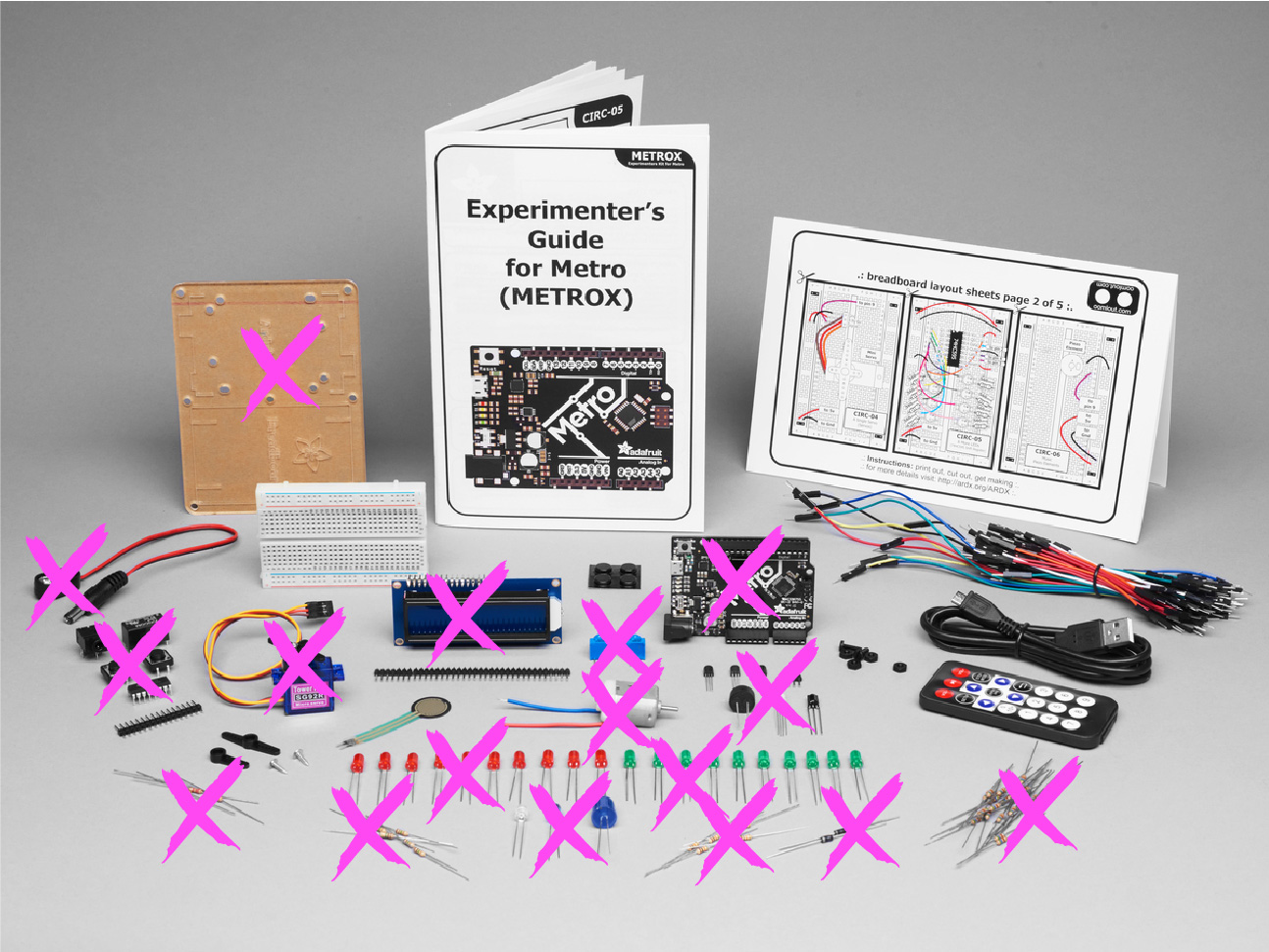

Above: The Adafruit MetroX Classic Kit is representative of a typical electronics education kit. Items marked with an “X” in the above image are potentially impacted by the new USTR tariffs.

New Tariffs Reward Offshoring, Encourage IP Flight

Some of the most compelling jobs to bring back to the US are the so-called “last screw” system integration operations. These often involve the complex and precise process of integrating simple sub-assemblies into high-value goods such as 3D printers or cell phones. Quality control and IP protection are paramount. I often advise startups to consider putting their system integration operations in the US because difficult-to-protect intellectual property, such as firmware, never has to be exported if the firmware upload operation happens in the US. The ability to leverage China for low-value subassemblies opens more headroom to create high-value jobs in the US, improving the overall competitiveness of American companies.

Unfortunately, the structure of the new tariffs is exactly the opposite of what you would expect to bring those jobs back to the US. Stiff new taxes on simple components, sub-assemblies, and tools like soldering irons, contrasted against a lack of taxation on finished goods, push business owners to send these “last screw” operations overseas. Basically, with these new tariffs, the more value-add sent outside the borders of the US, the more profitable a business will be. Not even concerns over IP security could overcome a 25% increase in base costs and keep operations in the US.
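To make that incentive concrete, here is a minimal back-of-the-envelope sketch in Python. The dollar figures are hypothetical and chosen only for illustration, and the function names are made up for this example; the only number taken from the announcement is the 25% rate. It assumes parts and labor cost the same in both scenarios, so the tariff is the only difference.

```python
TARIFF_RATE = 0.25  # the 25% rate from the USTR announcement


def cost_with_us_assembly(parts_cost, labor_cost, tariff_rate=TARIFF_RATE):
    """Import tariffed parts and sub-assemblies, then do the 'last screw' work in the US."""
    return parts_cost * (1 + tariff_rate) + labor_cost


def cost_with_offshore_assembly(parts_cost, labor_cost):
    """Assemble overseas and import the finished good, which gets a free pass."""
    return parts_cost + labor_cost


# Hypothetical per-unit figures, for illustration only.
parts = 40.00   # components and sub-assemblies sourced from China
labor = 10.00   # final assembly labor, assumed equal in both cases

us_total = cost_with_us_assembly(parts, labor)
offshore_total = cost_with_offshore_assembly(parts, labor)
penalty = us_total - offshore_total

print(f"Assembled in the US: ${us_total:.2f}")
print(f"Assembled offshore:  ${offshore_total:.2f}")
print(f"Penalty for keeping assembly in the US: ${penalty:.2f} per unit")
```

Under these assumed numbers the US-assembled unit costs $60.00 against $50.00 for the imported finished good. The $10.00 gap is exactly the tariff on the parts, and it grows with every additional dollar of components brought in for domestic assembly.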

It seems the intention of the new tariff structure was to minimize the immediate pain that voters would feel in the upcoming mid-terms by waiving taxes on finished goods. Unfortunately, the reality is it gives small businesses that were once considering setting up shop in the US a solid reason to look off-shore, while rewarding large corporations for heavy investments in overseas operations.

New Tariffs Hurt Educators and Makers

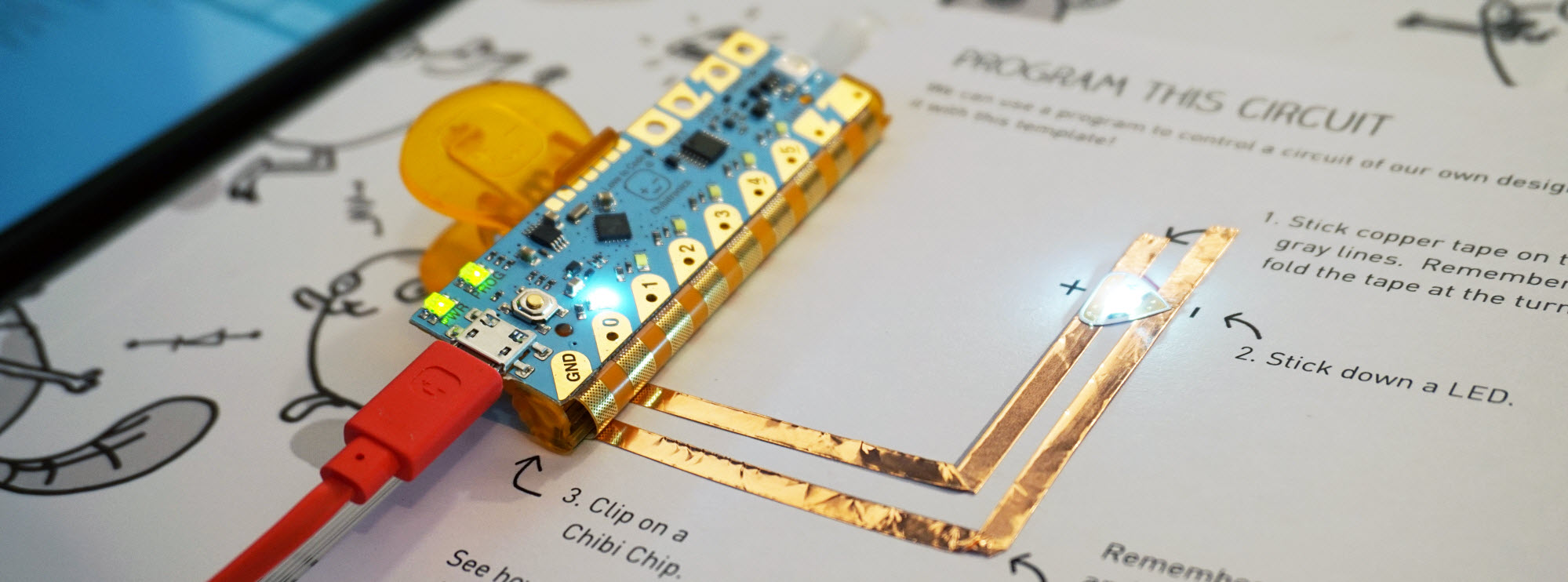

Learning how to blink a light is the de facto introduction to electronics. This project is often done with the help of a circuit board, such as a Microbit or Chibi Chip, and a type of light known as an LED. Unfortunately, both of those items – simple circuit boards and LEDs – are about to get 25% more expensive with the new tariffs, along with other Maker and educator staples such as capacitors, resistors, soldering irons, and oscilloscopes. The impact of this cost hike will be felt throughout the industry, but most sharply by educators, especially those serving under-funded school districts.

Above: Learning to blink a light is the de facto introduction to electronics, and it typically involves a circuit board and an LED, like those pictured above.

Somewhere on the Pacific Ocean right now floats a container of goods for ed-tech startup Chibitronics. The goods are slated primarily for educators and Makers that are stocking up for the fall semester. It will arrive in the US the second week of July, and will likely be greeted by a heavy import tax. I know this because I’m directly involved in the startup’s operations. Chibitronics’ core mission is to serve the educator market, and as part of that we routinely offered deep discounts on bulk products for educators and school systems. Now, thanks to the new tariffs on the basic components that educators rely upon to teach electronics, we are less able to fulfill our mission.

A 25% jump in base costs forces us to choose between immediate price increases or cutting the salaries of our American employees who support the educators. These new tariffs are a tax on America’s future – they deprive some of the most vulnerable groups of access to technology education, making future American workers less competitive on the global stage.

Above: Educator-oriented learning kits like the Chibitronics “Love to Code” are slated for price increases this fall due to the new tariffs.

Protectionism is Bad for Technological Leadership

Recently, I was sent photos by Hernandi Krammes of a network card that was manufactured in Brazil around 1992. One of the most striking features of the card was how retro it looked – straight out of the 80’s, a full decade behind its time. This is a result of Brazil’s policy of protectionist tariffs on the import of high-tech components. While stiff tariffs on the import of microchips drove investment in local chip companies, trade barriers meant the local companies didn’t have to be as competitive. With less incentive to re-invest or upgrade, local technology fell behind the curve, leading ultimately to anachronisms like the Brazilian Ethernet card pictured below.

Above: this Brazilian network card from 1992 features design techniques from the early 80’s. It is large and clunky compared to contemporaneous cards.

Significantly, it’s not that the Brazilian engineers were any less clever than their Western counterparts: they displayed considerable ingenuity getting a network card to work at all using primarily domestically-produced components. The tragedy is instead of using their brainpower to create industry-leading technology, most of their effort went into playing catch-up with the rest of the world. By the time protectionist policies were repealed in Brazil, the local industry was too far behind to effectively compete on a global scale.

Should the US follow Brazil’s protectionist stance on trade, it’s conceivable that some day I might be remarking on the quaintness of American network cards compared to their more advanced Chinese or European counterparts. Trade barriers don’t make a country more competitive – in fact, quite the opposite. In a competition of ideas, you want to start with the best tech available anywhere; otherwise, you’re still jogging to the starting line while the competition has already finished their first lap.

Stand Up and Be Heard

There is a sliver of good news in all of this for American Makers. The list of commodities targeted in the trade war is not yet complete. The “List 2” items – which include all manner of microchips, motors, and plastics (such as 3D printer PLA filament and acrylic sheets for laser cutting) that are building blocks for small businesses and Makers – have yet to be ratified. The USTR website has indicated that in the coming weeks it will disclose a process for public review and comment. Once this process is made transparent – whether you are a small business owner or the parent of a child with technical aspirations – I encourage you to please share your stories and concerns on how you will be negatively impacted by these additional tariffs.

Some of the List 2 items still under review include:

| HTS code | Description |
| --- | --- |
| 9030.31.00 | Multimeters for measuring or checking electrical voltage, current, resistance or power, without a recording device |
| 8541.10.00 | Diodes, other than photosensitive or light-emitting diodes |
| 8541.40.60 | Diodes for semiconductor devices, other than light-emitting diodes, nesoi |
| 8542.31.00 | Electronic integrated circuits: processors and controllers |
| 8542.32.00 | Electronic integrated circuits: memories |
| 8542.33.00 | Electronic integrated circuits: amplifiers |
| 8542.39.00 | Electronic integrated circuits: other |
| 8542.90.00 | Parts of electronic integrated circuits and microassemblies |
| 8501.10.20 | Electric motors of an output of under 18.65 W, synchronous, valued not over $4 each |
| 8501.10.60 | Electric motors of an output of 18.65 W or more but not exceeding 37.5 W |
| 8501.31.40 | DC motors, nesoi, of an output exceeding 74.6 W but not exceeding 735 W |
| 8544.49.10 | Insulated electric conductors of a kind used for telecommunications, for a voltage not exceeding 80 V, not fitted with connectors |
| 8544.49.20 | Insulated electric conductors nesoi, for a voltage not exceeding 80 V, not fitted with connectors |
| 3920.59.80 | Plates, sheets, film, etc, noncellular, not reinforced, laminated, combined, of other acrylic polymers, nesoi |
| 3916.90.30 | Monofilament nesoi, of plastics, excluding ethylene, vinyl chloride and acrylic polymers |

Here are some of the “List 1” items that are set to become 25% more expensive to import from China, come July 6th:

Staples used by every Maker or electronics educator:

| HTS code | Description |
| --- | --- |
| 8515.11.00 | Electric soldering irons and guns |
| 8506.50.00 | Lithium primary cells and primary batteries |
| 8506.60.00 | Air-zinc primary cells and primary batteries |
| 9030.20.05 | Oscilloscopes and oscillographs, specially designed for telecommunications |
| 9030.33.34 | Resistance measuring instruments |
| 9030.33.38 | Other instruments and apparatus, nesoi, for measuring or checking electrical voltage, current, resistance or power, without a recording device |
| 9030.39.01 | Instruments and apparatus, nesoi, for measuring or checking |

Circuit assemblies (like Microbit, Chibi Chip, Arduino):

| HTS code | Description |
| --- | --- |
| 8543.90.68 | Printed circuit assemblies of electrical machines and apparatus, having individual functions, nesoi |
| 9030.90.68 | Printed circuit assemblies, nesoi |

Basic electronic components:

| HTS code | Description |
| --- | --- |
| 8532.21.00 | Tantalum fixed capacitors |
| 8532.22.00 | Aluminum electrolytic fixed capacitors |
| 8532.23.00 | Ceramic dielectric fixed capacitors, single layer |
| 8532.24.00 | Ceramic dielectric fixed capacitors, multilayer |
| 8532.25.00 | Dielectric fixed capacitors of paper or plastics |
| 8532.29.00 | Fixed electrical capacitors, nesoi |
| 8532.30.00 | Variable or adjustable (pre-set) electrical capacitors |
| 8532.90.00 | Parts of electrical capacitors, fixed, variable or adjustable (pre-set) |
| 8533.10.00 | Electrical fixed carbon resistors, composition or film types |
| 8533.21.00 | Electrical fixed resistors, other than composition or film type carbon resistors, for a power handling capacity not exceeding 20 W |
| 8533.29.00 | Electrical fixed resistors, other than composition or film type carbon resistors, for a power handling capacity exceeding 20 W |
| 8533.31.00 | Electrical wirewound variable resistors, including rheostats and potentiometers, for a power handling capacity not exceeding 20 W |
| 8533.40.40 | Metal oxide resistors |
| 8533.40.80 | Electrical variable resistors, other than wirewound, including rheostats and potentiometers |
| 8533.90.80 | Other parts of electrical resistors, including rheostats and potentiometers, nesoi |
| 8541.21.00 | Transistors, other than photosensitive transistors, with a dissipation rating of less than 1 W |
| 8541.29.00 | Transistors, other than photosensitive transistors, with a dissipation rating of 1 W or more |
| 8541.30.00 | Thyristors, diacs and triacs, other than photosensitive devices |
| 8541.40.20 | Light-emitting diodes (LED’s) |
| 8541.40.70 | Photosensitive transistors |
| 8541.40.80 | Photosensitive semiconductor devices nesoi, optical coupled isolators |
| 8541.40.95 | Photosensitive semiconductor devices nesoi, other |
| 8541.50.00 | Semiconductor devices other than photosensitive semiconductor devices, nesoi |
| 8541.60.00 | Mounted piezoelectric crystals |
| 8541.90.00 | Parts of diodes, transistors, similar semiconductor devices, photosensitive semiconductor devices, LED’s and mounted piezoelectric crystals |
| 8504.90.75 | Printed circuit assemblies of electrical transformers, static converters and inductors, nesoi |
| 8504.90.96 | Parts (other than printed circuit assemblies) of electrical transformers, static converters and inductors |
| 8536.50.90 | Switches nesoi, for switching or making connections to or in electrical circuits, for a voltage not exceeding 1,000 V |
| 8536.69.40 | Connectors: coaxial, cylindrical multicontact, rack and panel, printed circuit, ribbon or flat cable, for a voltage not exceeding 1,000 V |
| 8544.49.30 | Insulated electric conductors nesoi, of copper, for a voltage not exceeding 1,000 V, not fitted with connectors |
| 8544.49.90 | Insulated electric conductors nesoi, not of copper, for a voltage not exceeding 1,000 V, not fitted with connectors |
| 8544.60.20 | Insulated electric conductors nesoi, for a voltage exceeding 1,000 V, fitted with connectors |
| 8544.60.40 | Insulated electric conductors nesoi, of copper, for a voltage exceeding 1,000 V, not fitted with connectors |

Parts to fix your phone if it breaks:

| HTS code | Description |
| --- | --- |
| 8537.10.80 | Touch screens without display capabilities for incorporation in apparatus having a display |
| 9033.00.30 | Touch screens without display capabilities for incorporation in apparatus having a display |
| 9013.80.70 | Liquid crystal and other optical flat panel displays other than for articles of heading 8528, nesoi |
| 9033.00.20 | LEDs for backlighting of LCDs |
| 8504.90.65 | Printed circuit assemblies of the goods of subheading 8504.40 or 8504.50 for telecommunication apparatus |

Power supplies:

| HTS code | Description |
| --- | --- |
| 9032.89.60 | Automatic regulating or controlling instruments and apparatus, nesoi |
| 9032.90.21 | Parts and accessories of automatic voltage and voltage-current regulators designed for use in a 6, 12, or 24 V system, nesoi |
| 9032.90.41 | Parts and accessories of automatic voltage and voltage-current regulators, not designed for use in a 6, 12, or 24 V system, nesoi |
| 9032.90.61 | Parts and accessories for automatic regulating or controlling instruments and apparatus, nesoi |
| 8504.90.41 | Parts of power supplies (other than printed circuit assemblies) for automatic data processing machines or units thereof of heading 8471 |